Google Adds AI Language Skills to Alphabet’s Helper Robots to Better Understand Humans, by James Vincent

“Google’s parent company Alphabet is bringing together two of its most ambitious research projects — robotics and AI language understanding — in an attempt to make a ‘helper robot’ that can understand natural language commands.”

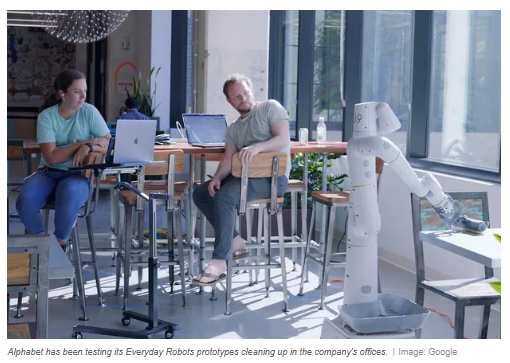

“Since 2019, Alphabet [has] been developing robots that can carry out simple tasks like fetching drinks and cleaning surfaces. This Everyday Robots project is still in its infancy — the robots are slow and hesitant — but the bots have now been given an upgrade: improved language understanding courtesy of Google’s large language model (LLM) PaLM.”

“Most robots only respond to short and simple instructions, like ‘bring me a bottle of water.’ But LLMs like GPT-3 and Google’s MUM are able to better parse the intent behind more oblique commands. In Google’s example, you might tell one of the Everyday Robots prototypes ‘I spilled my drink, can you help?’ The robot filters this instruction through an internal list of possible actions and interprets it as ‘fetch me the sponge from the kitchen.’”

“Yes, it’s kind of a low bar for an ‘intelligent’ robot, but it’s definitely still an improvement! What would be really smart would be if that robot saw you spill a drink, heard you shout ‘gah oh my god my stupid drink’ and then helped out.”