“Kinetix, a Paris-based AI-assisted 3D animation platform, today announced it has secured $11 million in a funding round. Kinetix, which was founded in 2020, has built a no-code platform that allows Web3 and content creators to transform any video into 3D animated avatars. Millions of creators around the world may now access 3D creation using the company’s free AI-powered platform, according to the company’s press release.”

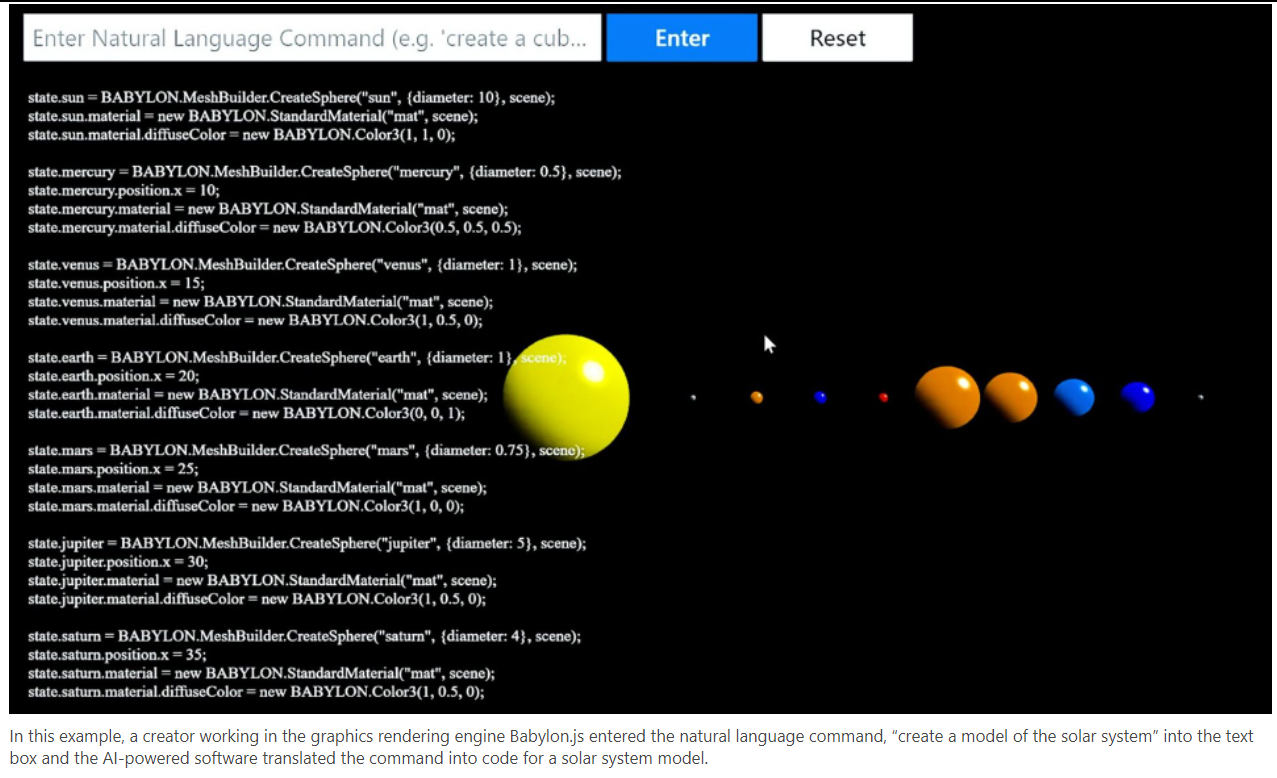

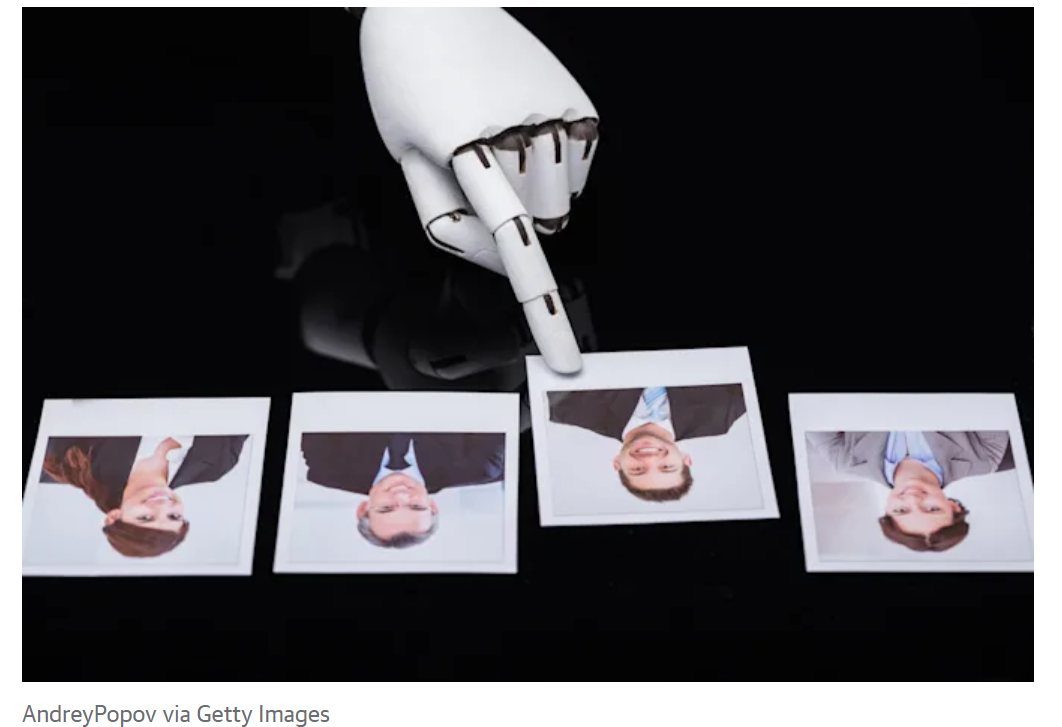

“No-code AI is a category in the AI landscape that tries to make AI more accessible to everyone. When it comes to AI and machine learning models, no-code AI refers to the use of a no-code development platform with a visual, code-free and frequently drag-and-drop interface. No-code AI enables the creation of AI models without the need for specially trained individuals. Since there is currently a scarcity of data science talent in the market, organizations can benefit from this greatly.”

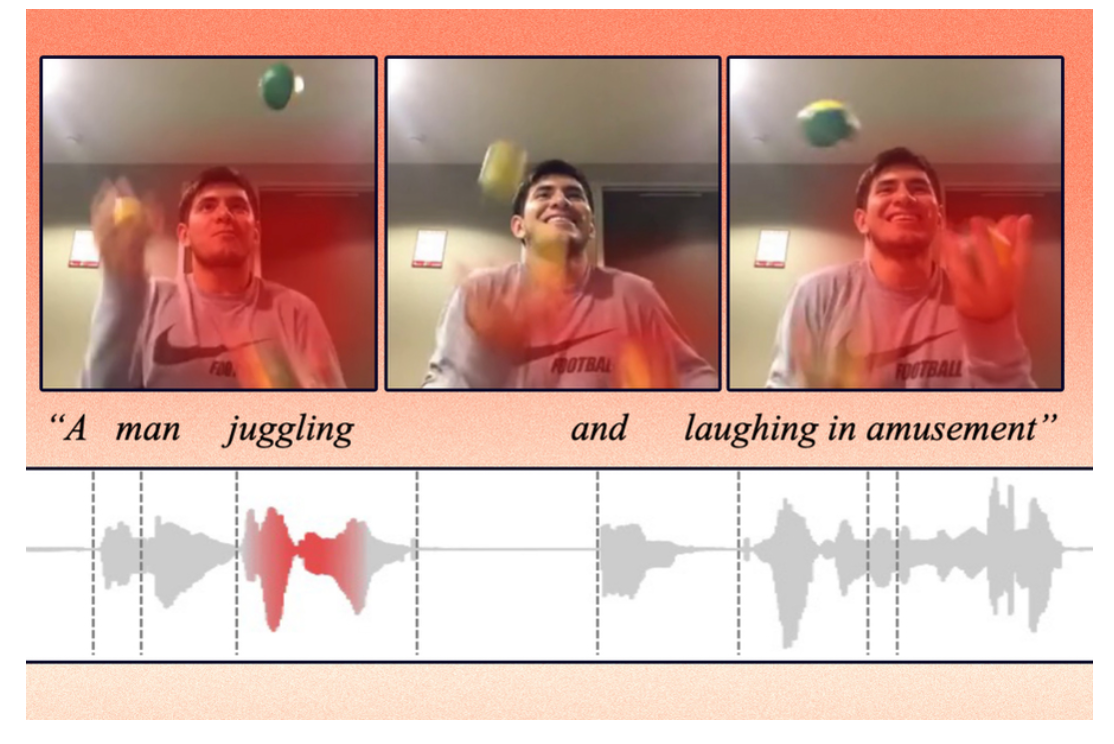

“With the Kinetix platform, anyone can generate fully featured 3D animations to share and integrate into virtual worlds simply by uploading video clips. This opens up new avenues for self-expression in the metaverse. It also makes the metaverse more engaging, like a video game, rather than a passive experience, like a movie. Instead of content created for consumption by media and advertising organizations, the metaverse will mainly incorporate user-generated content (UGC).”

“Given the rapid rise of virtual worlds, there is a demand for simple platforms for creating 3D content and monetizing it. That’s where Kinetix’s AI-powered, no-code technology comes in. Kinetix is designed to let creators upload snippets of video, integrate assets from the Kinetix library (which includes assets such as Adobe Mixamo and Ready Player Me), choose their design, and have a fully rendered 3D identity in no time!”

“‘In the metaverse, we provide a platform to foster user-generated content. We enable millions of people to create their own animated avatars and express their individuality in free and exciting ways, by making creation simple and quick,’ said Yassine Tahi, cofounder and CEO of Kinetix.”
