BLOG

Artificial Intelligence System Learns Concepts Shared Across Video, Audio, and Text, by Adam Zewe

“Humans observe the world through a combination of different modalities, like vision, hearing, and our understanding of language. Machines, on the other hand, interpret the world through data that algorithms can process.”

“So, when a machine ‘sees’ a photo, it must encode that photo into data it can use to perform a task like image classification. This process becomes more complicated when inputs come in multiple formats, like videos, audio clips, and images.”

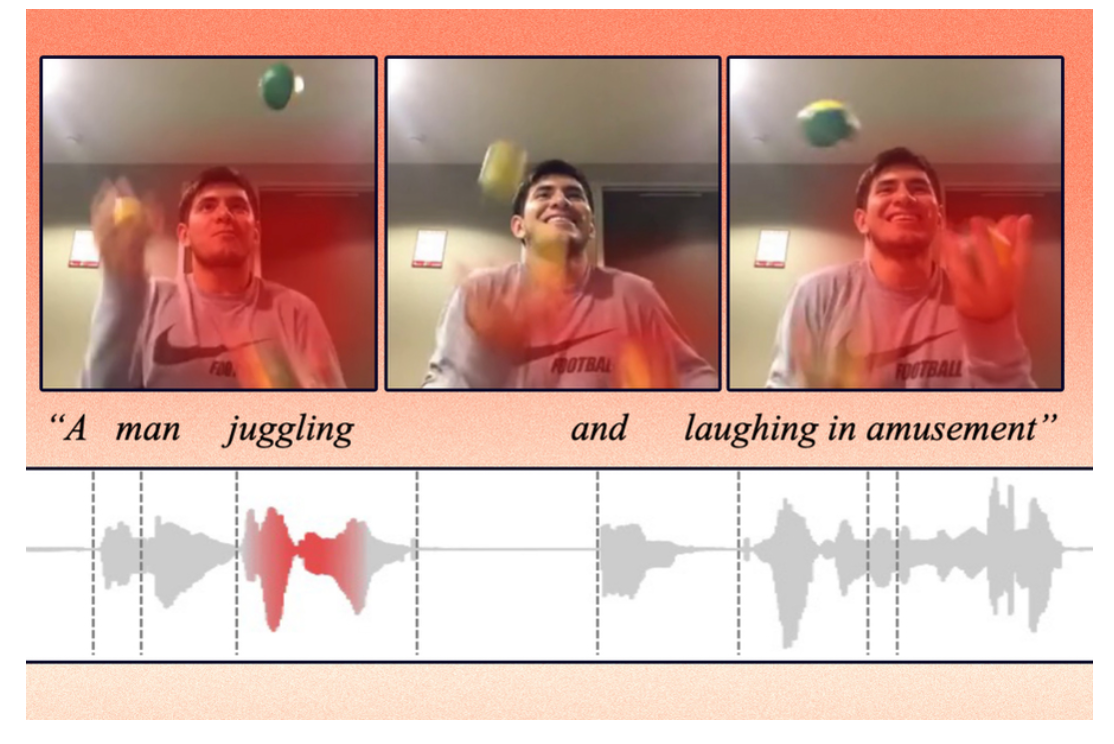

“‘The main challenge here is, how can a machine align those different modalities? As humans, this is easy for us. We see a car and then hear the sound of a car driving by, and we know these are the same thing. But for machine learning, it is not that straightforward,’ says Alexander Liu, a graduate student in the Computer Science and Artificial Intelligence Laboratory (CSAIL) and first author of a paper tackling this problem.”

“The researchers focus their work on representation learning, which is a form of machine learning that seeks to transform input data to make it easier to perform a task like classification or prediction.”

“The representation learning model takes raw data, such as videos and their corresponding text captions, and encodes them by extracting features, or observations about objects and actions in the video. Then it maps those data points in a grid, known as an embedding space. The model clusters similar data together as single points in the grid. Each of these data points, or vectors, is represented by an individual word.”
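
As a rough illustration of the embedding-space idea described above, here is a minimal sketch in Python. It is not the researchers' model: the three-dimensional vectors, the word list, and the `nearest_word` helper are all hypothetical, chosen only to show how inputs from different modalities can land near the same word vector.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical word vectors; a real model would learn these from data.
WORD_VECTORS = {
    "car": [0.9, 0.1, 0.0],
    "dog": [0.1, 0.9, 0.1],
    "juggling": [0.0, 0.2, 0.9],
}


def nearest_word(embedding, word_vectors=WORD_VECTORS):
    """Return the word whose vector is most similar to the given embedding."""
    return max(
        word_vectors,
        key=lambda word: cosine_similarity(embedding, word_vectors[word]),
    )


# Made-up encoder outputs for a video clip of a car and the sound of a car.
video_embedding = [0.8, 0.2, 0.1]
audio_embedding = [0.85, 0.05, 0.05]

print(nearest_word(video_embedding))
print(nearest_word(audio_embedding))
```

In this toy setup both the video and the audio embedding sit closest to the same word, which is the kind of alignment across modalities the excerpt describes.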

Kinetix Uses No-Code AI to Create 3D Animations, Secures $11M, by Kolawole Samuel Adebayo

“Kinetix, a Paris-based AI-assisted 3D animation platform, today announced it has secured $11 million in a funding round. Kinetix, which was founded in 2020, has built a no-code platform that allows Web3 and content creators to transform any video into 3D animated avatars. Millions of creators around the world may now access 3D creation using the company’s free AI-powered platform, according to the company’s press release.”

“No-code AI is a category in the AI landscape that tries to make AI more accessible to everyone. When it comes to AI and machine learning models, no-code AI refers to the use of a no-code development platform with a visual, code-free and frequently drag-and-drop interface. No-code AI enables the creation of AI models without the need for specially trained individuals. Since there is currently a scarcity of data science talent in the market, organizations can benefit from this greatly.”

“With the Kinetix platform, everybody can generate fully featured 3D animations to share and integrate into virtual worlds, by uploading video clips into the Kinetix platform. This opens up new avenues for self-expression in the metaverse. It then goes on to make the metaverse more engaging, similar to a video game, rather than a passive experience, like a movie. Instead of content created for consumption by media and advertising organizations, it will mainly incorporate user-generated content (UGC).”

“Given the rapid rise of virtual worlds, there is a demand for simple platforms for creating 3D content and monetizing it. That’s where Kinetix’s AI-powered, no-code technology comes in. Kinetix is designed to allow creators to upload snippets of video, integrate assets from the Kinetix library, which includes assets like Adobe Mixamo and Ready Player Me, choose their design and have a fully rendered 3D identity in no time!”

“‘In the metaverse, we provide a platform to foster user-generated content. We enable millions of people to create their own animated avatars and express their individuality in free and exciting ways, by making creation simple and quick,’ said Yassine Tahi, cofounder and CEO of Kinetix.”

Researchers Develop VR Headset With Mouth Haptics, by David Matthews

“Whether we like it or not, the metaverse is coming — and companies are trying to make it as realistic as possible. To that end, researchers from Carnegie Mellon University have developed haptics that mimic sensations around the mouth.”

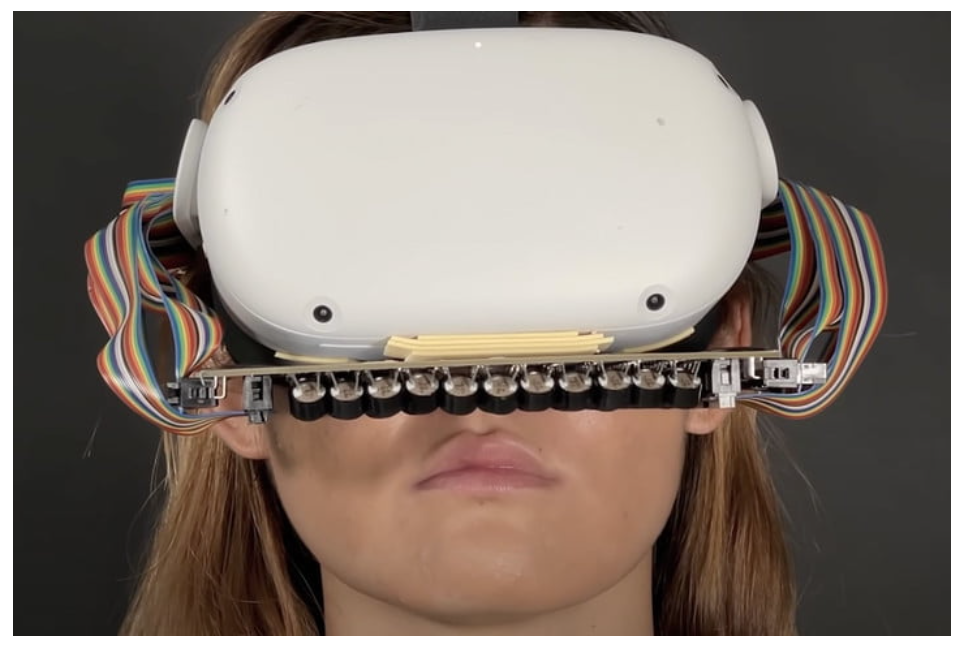

“The Future Interfaces Group at CMU created a haptic device that attaches to a VR headset. This device contains a grid of ultrasonic transducers that produce frequencies too high for humans to hear. However, if those frequencies are focused enough, they can create pressure sensations on the skin.”

“The mouth was chosen as a test bed because of how sensitive the nerves are. The team of researchers created combinations of pressure sensations to simulate different motions. These combinations were added to a basic library of haptic commands for different motions across the mouth.”

“To demonstrate the haptic device as a proof of concept, the team tested it on a small group of volunteers. The volunteers strapped on VR goggles (along with the mouth haptics) and went through a series of virtual worlds such as a racing game and a haunted forest.”

“The volunteers were able to interact with various objects in the virtual worlds like feeling spiders go across their mouths or the water from a drinking fountain. Shen noted that some volunteers instinctively hit their faces as they felt the spider ‘crawling’ across their mouths.”

Amazon Is Hiring to Build an “Advanced” and “Magical” AR/VR Product, by Samuel Axon

“Amazon plans to join other tech giants like Apple, Google, and Meta in building its own mass-market augmented reality product, job listings discovered by Protocol suggest.”

“The numerous related jobs included roles in computer vision, product management, and more. They reportedly referenced ‘XR/AR devices’ and ‘an advanced XR research concept.’ Since Protocol ran its report on Monday, several of the job listings referenced have been taken down, and others have had specific language about products removed.”

“Google, Microsoft, and Snap have all released various AR wearables to varying degrees of success over the years, and they seem to be still working on future products in that category. Meanwhile, it's one of the industry's worst-kept secrets that Apple employs a vast team of engineers, researchers, and more who are working on mixed reality devices, including mass-market consumer AR glasses. And Meta (formerly Facebook) has made its intentions to focus on AR explicitly clear over the past couple of years.”

“It's not all that surprising that Amazon is chasing the same thing. As Protocol notes, Amazon launched a new R&D group led by Kharis O'Connell, an executive who has previously worked on AR products at Google and elsewhere.”

“But Amazon's product might not be the same kind of product that we know Meta and Apple have focused on; it might not be a wearable at all. Some of Amazon's job listings refer to it as a ‘smart home’ device. And Amazon is among the tech companies that have experimented with room-scale projection and holograms instead of wearables for AR.”

Deep Science: Combining Vision and Language Could Be the Key to More Capable AI, by Kyle Wiggers

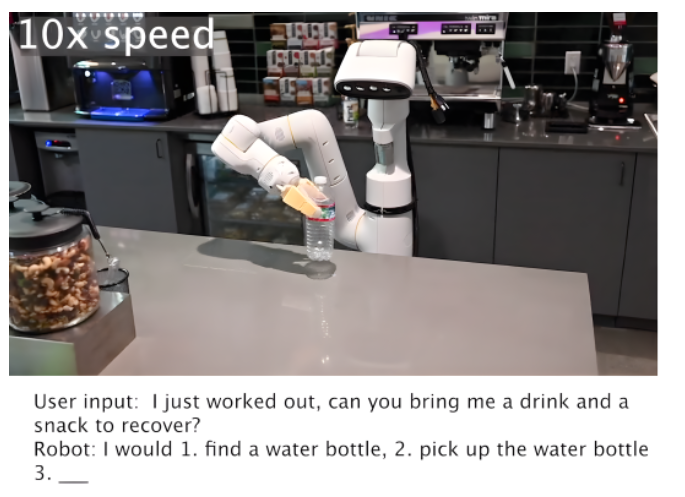

“Depending on the theory of intelligence to which you subscribe, achieving ‘human-level’ AI will require a system that can leverage multiple modalities — e.g., sound, vision and text — to reason about the world. For example, when shown an image of a toppled truck and a police cruiser on a snowy freeway, a human-level AI might infer that dangerous road conditions caused an accident. Or, running on a robot, when asked to grab a can of soda from the refrigerator, it would navigate around people, furniture and pets to retrieve the can and place it within reach of the requester.”

“Today’s AI falls short. But new research shows signs of encouraging progress, from robots that can figure out steps to satisfy basic commands (e.g., ‘get a water bottle’) to text-producing systems that learn from explanations. In this revived edition of Deep Science, our weekly series about the latest developments in AI and the broader scientific field, we’re covering work out of DeepMind, Google and OpenAI that makes strides toward systems that can — if not perfectly understand the world — solve narrow tasks like generating images with impressive robustness.”

“AI research lab OpenAI’s improved DALL-E, DALL-E 2, is easily the most impressive project to emerge from the depths of an AI research lab. As my colleague Devin Coldewey writes, while the original DALL-E demonstrated a remarkable prowess for creating images to match virtually any prompt (for example, ‘a dog wearing a beret’), DALL-E 2 takes this further. The images it produces are much more detailed, and DALL-E 2 can intelligently replace a given area in an image — for example inserting a table into a photo of a marbled floor replete with the appropriate reflections.”

“Another component is language understanding, which lags behind in many aspects — even setting aside AI’s well-documented toxicity and bias issues.”

“In a new study, DeepMind researchers investigate whether AI language systems — which learn to generate text from many examples of existing text (think books and social media) — could benefit from being given explanations of those texts.”

From Virtual Reality Afterlife Games to Death Doulas: Is Our View of Dying Finally Changing?, by Sara Moniuszko

“Everyone dies. And while it will happen to all of us, we rarely talk about it with ease. But that's starting to change, from video games about the afterlife to TV shows that help prepare you to pass.”

“Although death remains a painful and mysterious part of life, experts say new technologies, grieving options and professions related to end-of-life care are shifting society's comfort levels around discussing it.”

“‘If we don't talk about (death and loss), we're basically ignoring it. By ignoring this fact, it only serves to deepen the pain,’ explains Ron Gura, co-founder and CEO of Empathy, a platform that helps families navigate the death of loved ones.”

“We use apps every day to connect with friends and order food, but we didn't have one to help us through challenging moments like loss and grief – until now.”

“Technology can not only help people through the process of loss, but also spark conversations around death, explains Dr. Candi Cann, Baylor University death scholar and researcher.”

“She's been tracking the rise of the intersection of mourning and gaming, pointing to a virtual reality game released last year called ‘Before Your Eyes,’ which shows the perspective of a soul's journey on its way to the afterlife.”

VR Role-Play Therapy Helps People With Agoraphobia, Finds Study, by Nicola Davis

“It’s a sunny day on a city street as a green bus pulls up by the kerb. Onboard, a handful of passengers sit stony-faced as you step up to present your pass. But you cannot see your body – only a floating pair of blue hands.”

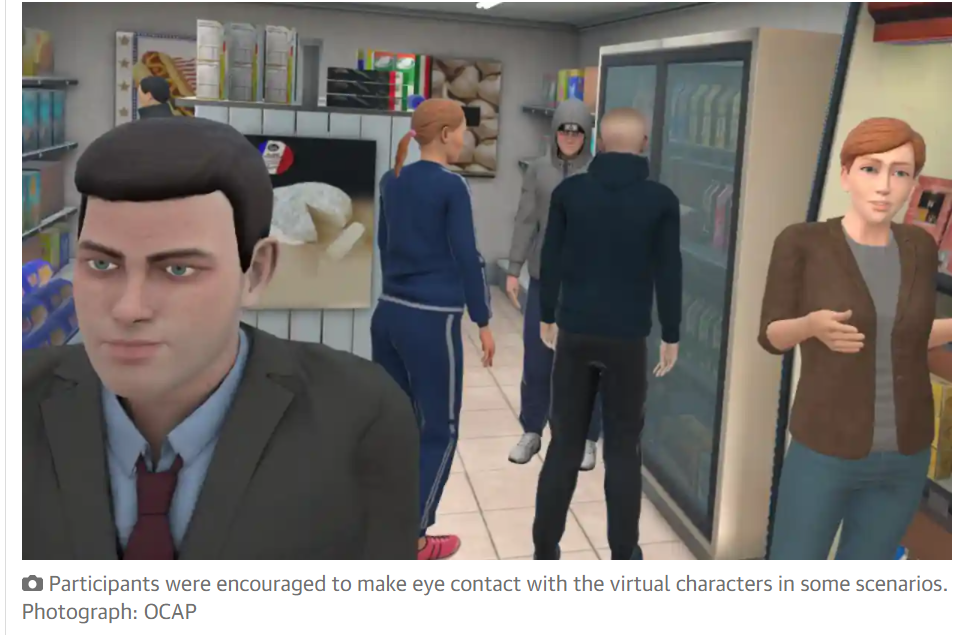

“It might sound like a bizarre dream, but the scenario is part of a virtual reality (VR) system designed to help people with agoraphobia – those for whom certain environments, situations and interactions can cause intense fear and distress.”

“Scientists say the approach enables participants to build confidence and ease their fears, helping them to undertake tasks in real life that they had previously avoided. The study also found those with more severe psychological problems benefited the most.”

“The VR experience begins in a virtual therapist’s office before moving to scenarios such as opening the front door or being in a doctor’s surgery, each with varying levels of difficulty. Participants are asked to complete certain tasks, such as asking for a cup of coffee, and are encouraged to make eye contact or move closer to other characters.”

Virtual Reality and Motor Imagery With PT Ease Motor Symptoms, by Lindsey Shapiro

“Combining a 12-week program of virtual reality (VR) training and motor imagery exercises with standard physical therapy (PT) significantly lessened motor symptoms — including tremors, slow movements (bradykinesia), and postural instability — among people with Parkinson’s disease, according to a recent study.”

“‘To the best of our knowledge, this is the first trial to show the effects of … virtual games and [motor imagery] along with routine PT on the components of motor function such as tremors, posture, gait, body bradykinesia, and postural instability in [Parkinson’s disease] patients,’ the researchers wrote.”

“Recent evidence suggests that virtual reality training — using video game systems — may be a highly effective supplement to physical therapy, with the ability to improve motor learning and brain function. VR training also has been shown to boost attention, self-esteem, and motivation, as well as increase levels of the brain’s reward chemical, dopamine, which may increase the likelihood of participation and therapy adherence.”

“Motor imagery training, known as MI, is a process in which participants imagine themselves performing a movement without actually moving or tensing the muscles involved in the movement. It is thought that such activity strengthens the brain’s motor cortex, and also may be a promising therapeutic approach in Parkinson’s.”

“Now, a team of researchers in Pakistan examined whether a combined approach of VR and MI could lessen disease symptoms in people with Parkinson’s. Patients ages 50 to 80, with idiopathic Parkinson’s — whose disease is of unknown cause — were recruited from the Safi Hospital in Faisalabad.”

“Despite also being limited by a small sample size, the results overall suggest that VR together with MI training and routine physical therapy ‘might be the most effective in treating older adults with mild-to-moderate [Parkinson’s disease] stages,’ researchers concluded.”

Museum Launches Dead Sea NFT Photo Collection On World Water Day, by Simona Shemer

“The Dead Sea Museum, a physical art museum planned to be built in the city of Arad, has launched its first NFT collection of Dead Sea photographs by environmental art activist Noam Bedein, founder of the Dead Sea Revival Project.”

“The collection of 100 selected images, dubbed Genesis NFT, highlights the disappearing beauty of the Dead Sea in order to raise environmental awareness for the world wonder, a statement from the museum said.”

“Bedein is the first to document the Dead Sea World Heritage Site solely by boat and has a database of over 25,000 photographs from the past six years, showing fascinating hidden layers exposed at the lowest point on Earth, and revealed due to the drop in sea level, which is currently at its lowest level in recorded history.”

“The auction will start on March 22, which is World Water Day, the annual observance established by the United Nations that highlights the importance of freshwater and advocates for its sustainable management. It will end on April 22, which is Earth Day. All proceeds from the sale will be used for the museum planning and for legal and legislative efforts to restore water to the Dead Sea.”

“Preservation of the Dead Sea is important because ‘it holds a wealth of resources that help people and the planet, and it is deeply rooted in the vast history of this land and the people of Israel,’ Fruchter explains.”

Want to Make Robots Run Faster? Try Letting AI Take Control, by James Vincent

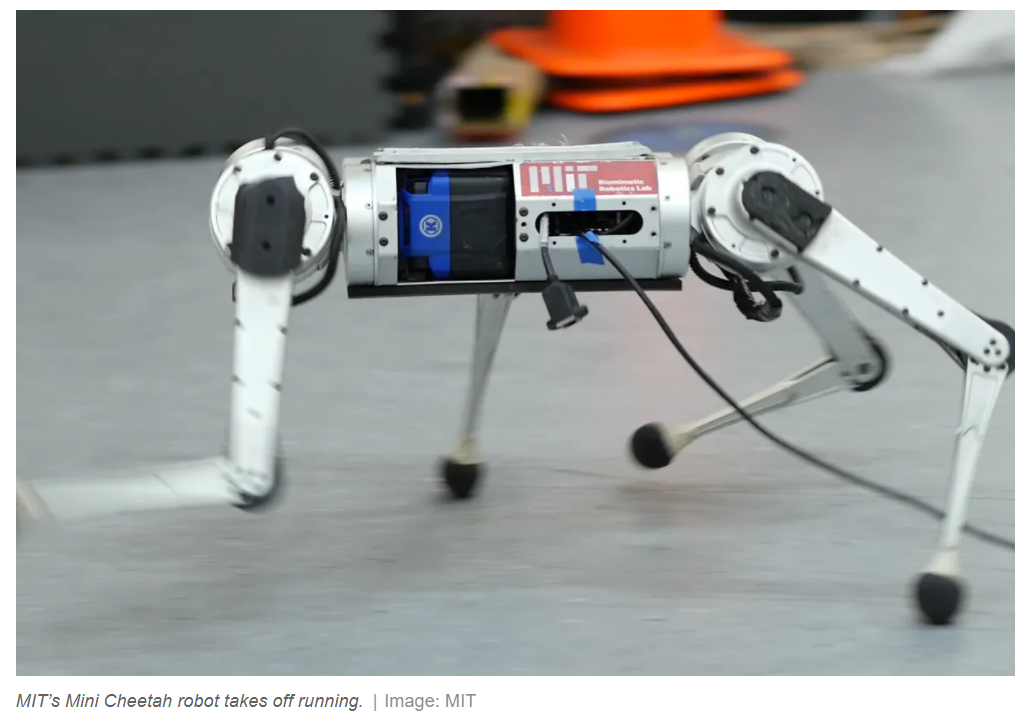

“Quadrupedal robots are becoming a familiar sight, but engineers are still working out the full capabilities of these machines. Now, a group of researchers from MIT says one way to improve their functionality might be to use AI to help teach the bots how to walk and run.”

“Usually, when engineers are creating the software that controls the movement of legged robots, they write a set of rules about how the machine should respond to certain inputs. So, if a robot’s sensors detect x amount of force on leg y, it will respond by powering up motor a to exert torque b, and so on. Coding these parameters is complicated and time-consuming, but it gives researchers precise and predictable control over the robots.”
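
To make the hand-written approach concrete, here is a minimal sketch of a rule-based controller in Python. Everything in it is an assumption for illustration: the `Rule` fields, the leg and motor names, and the threshold and torque values are hypothetical, and real controllers are far more involved.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """One hand-written rule: force on a leg triggers a torque on a motor."""
    leg: str
    force_threshold: float  # newtons (hypothetical value)
    motor: str
    torque: float  # newton-metres (hypothetical value)


# Hypothetical rule table an engineer might write by hand.
RULES = [
    Rule("front_left", 20.0, "front_left_knee", 5.0),
    Rule("front_left", 40.0, "front_left_hip", 8.0),
    Rule("rear_right", 25.0, "rear_right_knee", 6.0),
]


def respond(leg, force, rules=RULES):
    """Return the motor torques commanded for a force reading on one leg."""
    commands = {}
    for rule in rules:
        if rule.leg == leg and force >= rule.force_threshold:
            commands[rule.motor] = rule.torque
    return commands


print(respond("front_left", 25.0))
print(respond("front_left", 45.0))
```

Every new terrain or failure case means another rule in the table, which is why the excerpt calls this style predictable but time-consuming.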

“An alternative approach is to use machine learning — specifically, a method known as reinforcement learning that functions through trial and error. This works by giving your AI model a goal known as a ‘reward function’ (e.g., move as fast as you can) and then letting it loose to work out how to achieve that outcome from scratch. This takes a long time, but it helps if you let the AI experiment in a virtual environment where you can speed up time. It’s why reinforcement learning, or RL, is a popular way to develop AI that plays video games.”
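
The trial-and-error loop can be sketched with one of the simplest reinforcement learning setups, an epsilon-greedy bandit. This is not the MIT team's method. The gait names, the simulated speeds, and the `learn_fastest_gait` function are hypothetical stand-ins for a physics simulator and a full RL algorithm.

```python
import random

# Hypothetical simulator: the speed (m/s) each gait reaches in a virtual world.
SIMULATED_SPEED = {
    "slow_trot": 1.0,
    "fast_trot": 2.5,
    "bound": 3.0,
    "overstride": 0.4,
}


def reward(gait):
    """Reward function for the goal 'move as fast as you can'."""
    return SIMULATED_SPEED[gait]


def learn_fastest_gait(episodes=200, epsilon=0.1, seed=0):
    """Learn by trial and error which gait earns the highest reward."""
    rng = random.Random(seed)
    gaits = list(SIMULATED_SPEED)
    value = {gait: 0.0 for gait in gaits}  # running estimate of each reward
    count = {gait: 0 for gait in gaits}

    for episode in range(episodes):
        if episode < len(gaits):
            gait = gaits[episode]  # try every gait once to start
        elif rng.random() < epsilon:
            gait = rng.choice(gaits)  # explore a random gait
        else:
            gait = max(gaits, key=value.get)  # exploit the best estimate

        observed = reward(gait)
        count[gait] += 1
        # Incremental mean of the rewards seen for this gait.
        value[gait] += (observed - value[gait]) / count[gait]

    return max(gaits, key=value.get)


print(learn_fastest_gait())
```

The agent is never told which gait is fastest. It only sees the reward after each attempt, and running the attempts in simulation is what lets the many trials finish quickly.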

“Margolis and Yang say a big advantage of developing controller software using AI is that it’s less time-consuming than messing about with all the physics. ‘Programming how a robot should act in every possible situation is simply very hard. The process is tedious because if a robot were to fail on a particular terrain, a human engineer would need to identify the cause of failure and manually adapt the robot controller,’ they say.”