BLOG

No, Google’s AI Is Not Sentient, by Rachel Metz

“Tech companies are constantly hyping the capabilities of their ever-improving artificial intelligence. But Google was quick to shut down claims that one of its programs had advanced so much that it had become sentient.”

“According to an eye-opening tale in the Washington Post on Saturday, one Google engineer said that after hundreds of interactions with a cutting edge, unreleased AI system called LaMDA, he believed the program had achieved a level of consciousness.”

“In interviews and public statements, many in the AI community pushed back at the engineer's claims, while some pointed out that his tale highlights how the technology can lead people to assign human attributes to it. But the belief that Google's AI could be sentient arguably highlights both our fears and expectations for what this technology can do.”

How AI Will Change the Future of Search Engine Optimization, by SEO Vendo

“As artificial intelligence (AI) becomes more sophisticated, search engine optimization will have to adapt. AI can already analyze data at a rate that humans can’t, so it’s only a matter of time until it starts to dominate SEO strategies.”

“The SEO industry is always in a state of flux. Google is constantly changing its algorithms, and new technologies are emerging all the time. Ever since Google’s announcement in 2015 that they would be using RankBrain, an artificial intelligence (AI) system, to help process search results, the SEO community has been discussing the impact that AI will have on the industry.”

“RankBrain is not the only AI system that Google is using. In 2017, they announced that they were also using machine learning to fight spam.”

“Since Google’s Search Liaison, Danny Sullivan, confirmed in 2019 that RankBrain was now being used for every search query, several companies have started to experiment with AI and machine learning to try and get ahead of the curve. This gave rise to a whole new plethora of AI-based SEO tools. Some of the prominent ways in which AI tools have proven their effectiveness are SEO keyword research, content creation, traffic and site growth analysis, voice search, and SEO workflows.”

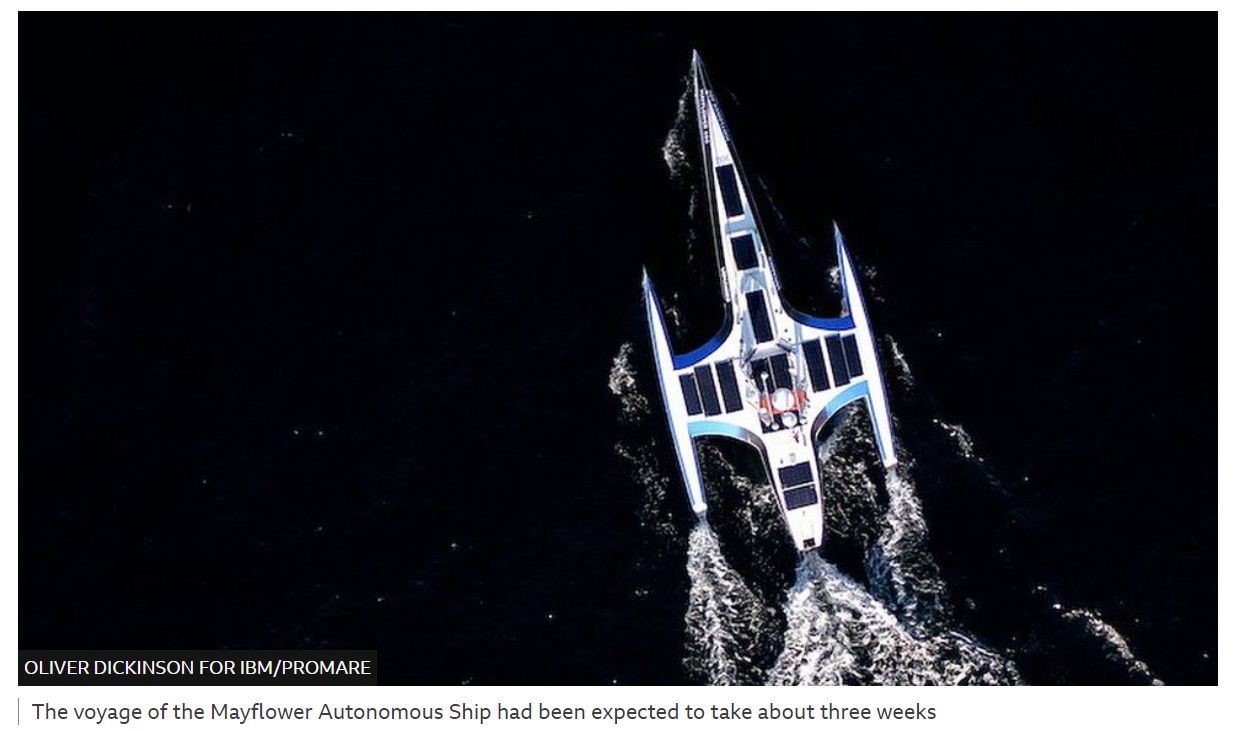

AI-Driven Robot Boat Mayflower Crosses Atlantic Ocean, by BBC News

“A crewless ship designed to recreate the Mayflower's historic journey across the Atlantic 400 years ago has crossed the ocean, project bosses have said.”

“The Mayflower Autonomous Ship (MAS) completed a 2,700-mile (4,400km) trip from Plymouth in the UK to Halifax in Nova Scotia, Canada, on Sunday.”

“It had been due to go to Massachusetts in the USA, but was diverted to Canada to investigate issues it had at sea.”

“The craft was likely to stay in Halifax for a week or two, managers said.”

“The 50ft (15m) long solar-powered trimaran is capable of speeds of up to 10 knots (20km/h) and was navigated by on-board artificial intelligence (AI) created by IBM with information from six cameras and 50 sensors.”

“It was created to show the development of technology in the centuries since the Pilgrim Fathers set sail for the New World, bosses said.”

Artificial General Intelligence Is Not as Imminent as You Might Think, by Gary Marcus

“To the average person, it must seem as if the field of artificial intelligence is making immense progress.”

“Don't be fooled. Machines may someday be as smart as people, and perhaps even smarter, but the game is far from over. There is still an immense amount of work to be done in making machines that truly can comprehend and reason about the world around them. What we really need right now is less posturing and more basic research.”

“To be sure, there are indeed some ways in which AI truly is making progress—synthetic images look more and more realistic, and speech recognition can often work in noisy environments—but we are still light-years away from general purpose, human-level AI that can understand the true meanings of articles and videos, or deal with unexpected obstacles and interruptions. We are still stuck on precisely the same challenges that academic scientists (including myself) have been pointing out for years: getting AI to be reliable and getting it to cope with unusual circumstances.”

China Floats First-Ever AI-Powered Drone Mothership, by Gabriel Honrada

“China has launched the world’s first artificial intelligence-operated drone carrier, an unmanned maritime mothership that can be used for maritime research, intelligence-gathering and even potentially repelling enemy drone swarms.”

“The autonomous ship, known as Zhu Hai Yun, was launched on May 18 in Guangzhou and travels at a top speed of 18 knots. Most of the 88.5-meter-long ship is an open deck for storing, launching and recovering flying drones.”

“It is also equipped with launch and recovery equipment for aquatic drones. Moreover, the ship’s drones are reportedly capable of forming a network with which to observe targets. Its AI operating system allows it to carry 50 flying, surface and submersible drones that can launch and recover autonomously.”

“According to the Chinese state-run Science and Technology Daily, the ship will be deployed to carry out marine scientific research and observation and serve various roles in marine disaster prevention and mitigation, environmental monitoring and offshore wind farm maintenance.”

“Dake Chen, director of the Southern Marine Science and Engineering Guangdong Laboratory that owns the ship, told media that ‘the vessel is not only an unprecedented precision tool at the frontier of marine science, but also a platform for marine disaster prevention and mitigation, seabed precision mapping, marine environment monitoring, and maritime search and rescue.’”

Police VR Training: Empathy Machine or Expensive Distraction? By Mack DeGeurin

“‘I just wish I could save them all,’ my virtual reality police officer avatar says as he gazes upon a young woman’s abandoned corpse lying beside a back-alley dumpster. My VR cop partner offers a limp gesture of condolence but doesn’t sugarcoat the reality: My decision got this woman killed.”

“I made the incorrect, deadly choice during an hour-long demo of Axon’s VR offerings earlier this month. The company, which created the Taser and now claims the lion’s share of the cop body camera market, believes the techniques practiced in these VR worlds can lead to improved critical thinking, de-escalation skills, and, eventually, decreased violence. I was grappling with the consequences of my decision in the Virtual Reality Simulator Training’s ‘Community Engagement’ mode, which uses scripted videos of complicated scenarios cops might have to respond to in the real world.”

“Experts on policing and privacy who spoke with Gizmodo did not share Chin’s rosy outlook. They expressed concerns that Axon’s bite-sized approach to VR training would limit any empathy police officers could build. Others worried bias in the VR narratives would create blind spots around truly understanding a suspect’s perspective. Still others said Axon’s tech-focused approach would do nothing to reduce the overall number of times police interacted with vulnerable people—an expensive, unnecessary solution.”

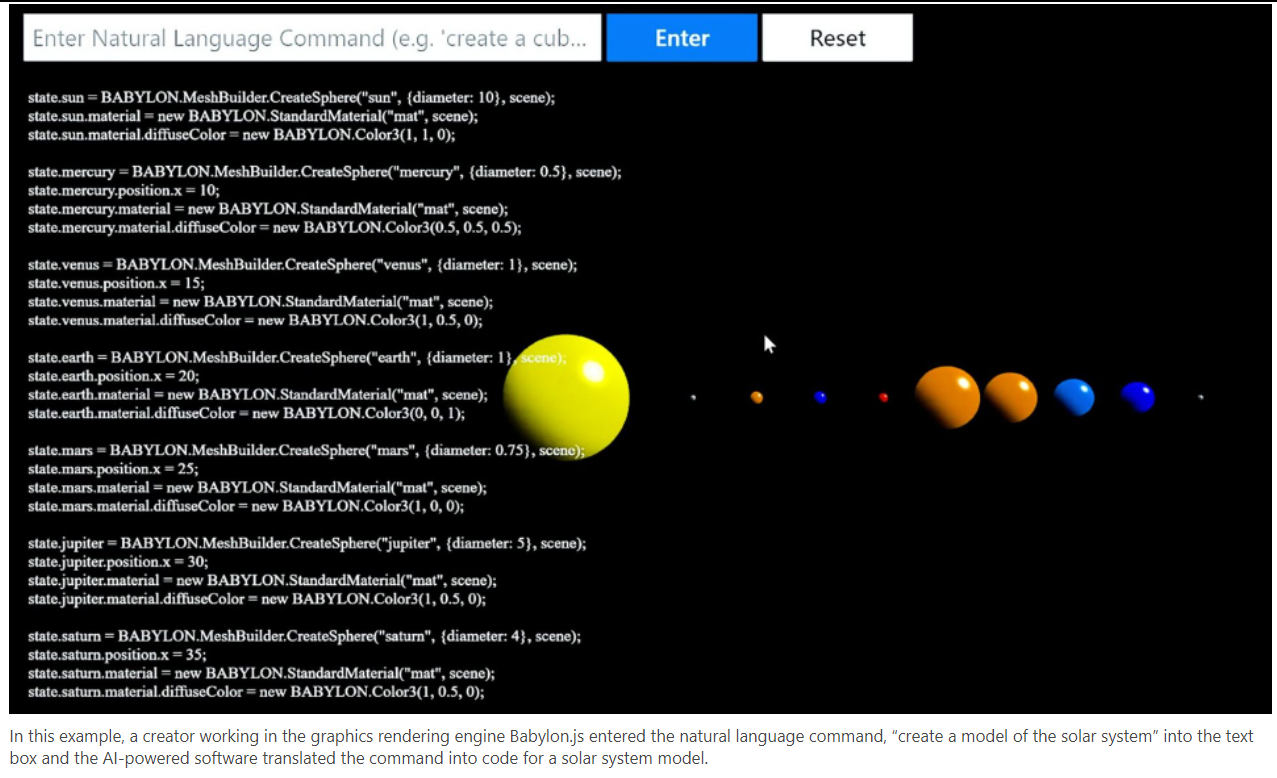

How AI Makes Developers’ Lives Easier, and Helps Everybody Learn to Develop Software, by John Roach

“Ever since Ada Lovelace, a polymath often considered the first computer programmer, proposed in 1843 using holes punched into cards to solve mathematical equations on a never-built mechanical computer, software developers have been translating their solutions to problems into step-by-step instructions that computers can understand.”

“That’s now changing, according to Kevin Scott, Microsoft’s chief technology officer.”

“Today, AI-powered software development tools are allowing people to build software solutions using the same language that they use when they talk to other people. These AI-powered tools translate natural language into the programming languages that computers understand.”

“‘That allows you, as a developer, to have an intent to accomplish something in your head that you can express in natural language and this technology translates it into code that achieves the intent you have,’ Scott said. ‘That’s a fundamentally different way of thinking about development than we’ve had since the beginning of software.’”

“This paradigm shift is driven by Codex, a machine learning model from AI research and development company OpenAI that can translate natural language commands into code in more than a dozen programming languages.”

You Can Practice for a Job Interview With Google AI, by J. Fingas

“Never mind reading generic guides or practicing with friends — Google is betting that algorithms can get you ready for a job interview. The company has launched an Interview Warmup tool that uses AI to help you prepare for interviews across various roles. The site asks typical questions (such as the classic ‘tell me a bit about yourself’) and analyzes your voiced or typed responses for areas of improvement. You'll know when you overuse certain words, for instance, or if you need to spend more time talking about a given subject.”

“Interview Warmup is aimed at Google Career Certificates users hoping to land work, and most of its role-specific questions reflect this. There are general interview questions, though, and Google plans to expand the tool to help more candidates. The feature is currently only available in the US.”

“AI has increasingly been used in recruitment. To date, though, it has mainly served companies during their selection process, not the potential new hires. This isn't going to level the playing field, but it might help you brush up on your interview skills.”

Pixel by Pixel: How Google Is Trying to Focus and Ship the Future, by David Pierce

“At I/O 2019, onstage at the Shoreline Auditorium in Mountain View, California, Rick Osterloh, Google’s SVP of devices and services, laid out a new vision for the future of computing. ‘In the mobile era, smartphones changed the world,’ he said. ‘It’s super useful to have a powerful computer wherever you are.’ But he described an even more ambitious world beyond that, where your computer wasn’t a thing in your pocket at all. It was all around you. It was everything. ‘Your devices work together with services and AI, so help is anywhere you want it, and it’s fluid. The technology just fades into the background when you don’t need it. So the devices aren’t the center of the system — you are.’ He called the idea ‘ambient computing,’ nodding to a concept that has floated around Amazon, Apple, and other companies over the last few years.”

“One easy way to interpret ambient computing is around voice assistants and robots. Put Google Assistant in everything, yell at your appliances, done and done. But that’s only the very beginning of the idea. The ambient computer Google imagines is more like a guardian angel or a super-sentient Star Wars robot. It’s an engine that understands you completely and follows you around, churning through and solving for all the stuff in your life. The small (when’s my next appointment?) and the big (help me plan my wedding) and the mundane (turn the lights off) and the life-changing (am I having a heart attack?). The wheres and whens and hows don’t matter, only the whats and whys. The ambient computer isn’t a gadget — it’s almost a being; it’s the greater-than-the-sum-of-its-parts whole that comes out of a million perfectly-connected things.”

Google Will Let You Talk to Assistant on the Nest Hub Max Just by Looking at the Screen, by Jay Peters

“The Nest Hub Max’s latest updates let you use Google Assistant without having to say ‘Hey Google’ ahead of every request.”

“One of the ways that’ll work is with a new feature Google calls ‘Look and Talk.’ Once it’s turned on, you’ll be able to look at the Nest Hub Max’s display and ask a question, no ‘Hey Google’ prompt required. The feature could be a handy way to save some time when you’re already looking at a Nest Hub Max’s screen — I could see it being a useful way to ask for recipes.”

“Look and Talk is an opt-in feature, and you’ll need to have both Google’s Face Match and Voice Match technologies turned on to use it, according to a blog post from Sissie Hsiao, Google’s vice president of Assistant. Audio and video from the Look and Talk interactions will be processed on the device. Look and Talk will be available first on the Nest Hub Max in the US.”