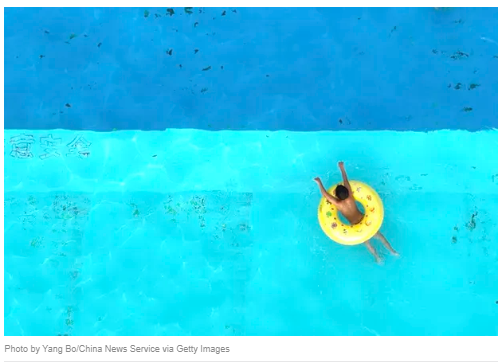

“The French government has collected nearly €10 million in additional taxes after using machine learning to spot undeclared swimming pools in aerial photos. In France, housing taxes are calculated based on a property’s rental value, so homeowners who don’t declare swimming pools are potentially avoiding hundreds of euros in additional payments.”

“The project to spot the undeclared pools began last October, with IT firm Capgemini working with Google to analyze publicly available aerial photos taken by France’s National Institute of Geographic and Forest Information. Software was developed to identify pools, with this information then cross-referenced with national tax and property registries.”

“The project is somewhat limited in scope, and has so far analyzed photos covering only nine of France’s 96 metropolitan departments. But even in these areas, officials discovered 20,356 undeclared pools, according to an announcement this week from France’s tax office, the General Directorate of Public Finance (DGFiP), first reported by Le Parisien.”

“As of 2020, it was estimated that France had around 3.2 million private swimming pools, but constructions have reportedly surged as more people worked from home during COVID-19 lockdowns, and summer temperatures have soared across Europe.”

“Ownership of private pools has become somewhat contentious in France this year, as the country has suffered from a historic drought that has emptied rivers of water. An MP for the French Green party (Europe Écologie les Verts) made headlines after refusing to rule out a ban on the construction of new private pools. The MP, Julien Bayou, said such a ban could be used as a ‘last resort’ response. He later clarified his remarks on Twitter, saying: ‘[T]here are ALREADY restrictions on water use, for washing cars and sometimes for filling swimming pools. The challenge is not to ban swimming pools, it is to guarantee our vital water needs.’”